[2020-11-02: There is a newer version of this post]

As I wrote about installing ZFS with native encryption on Ubuntu 20.04, it got me thinking... Should I abandon my LUKS setup and switch? Well, I guess some performance testing was in order.

For this purpose I decided to go with Ubuntu Server (to minimize the impact a desktop environment might have) inside a virtual machine with 2 CPUs and 24 GB of RAM. Two CPUs should be enough to show any multithreading performance difference, while 24 GB of RAM is there to hold our ZFS disks. I didn't want to depend on disk speed and the variation it brings. For testing purposes I only care about the relative speed difference, and using RAM instead of real disks gives more repeatable results.

For the OS I used Ubuntu Server with the ZFS packages, carved out a chunk of memory for a RAM disk, and limited the ZFS ARC to 1 GiB.

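```shell
sudo -i << EOF
apt update
apt dist-upgrade -y
apt install -y zfsutils-linux
# The mount point must exist before the tmpfs entry can be mounted at boot
mkdir -p /ramdisk
grep -qs "/ramdisk" /etc/fstab || echo "tmpfs /ramdisk tmpfs rw,size=20G 0 0" \
  | tee -a /etc/fstab
# Limit the ARC to 1 GiB (append so any existing zfs.conf options are kept)
grep -qs "zfs_arc_max" /etc/modprobe.d/zfs.conf || echo "options zfs zfs_arc_max=1073741824" \
  | tee -a /etc/modprobe.d/zfs.conf
reboot
EOF
```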
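The `zfs_arc_max` value above is simply 1 GiB written out in bytes. Here is a minimal sketch for computing it and for checking, after the reboot, that the limit actually took effect; the helper name `gib_to_bytes` is hypothetical, while the sysfs path is the standard location of ZFS module parameters once the module is loaded.

```shell
#!/usr/bin/env bash
# Convert a size in GiB to the byte count that the zfs_arc_max option expects.
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

gib_to_bytes 1   # 1 GiB, the ARC limit used above

# Once the zfs module is loaded, the active limit can be read back from sysfs.
arc_param=/sys/module/zfs/parameters/zfs_arc_max
if [ -r "$arc_param" ]; then
  echo "active zfs_arc_max: $(cat "$arc_param")"
fi
```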

With the system in a pristine state, I created the data used for testing (2 GiB of random bytes).

```shell
dd if=/dev/urandom of=/ramdisk/data.bin bs=1M count=2048
```

The data disks are just a bunch of zeros (3 GB each), and the RAID-Z2 ZFS pool has the usual settings, but with compression turned off and sync set to always in order to minimize the impact of compression and write caching on the results.

```shell
for I in {1..6}; do dd if=/dev/zero of=/ramdisk/disk$I.bin bs=1MB count=3000; done
# Pool creation needs root; change encryption= to test other ciphers
echo "12345678" | sudo zpool create -o ashift=12 -O normalization=formD \
  -O acltype=posixacl -O xattr=sa -O dnodesize=auto -O atime=off \
  -O encryption=aes-256-gcm -O keylocation=prompt -O keyformat=passphrase \
  -O compression=off -O sync=always -O mountpoint=/zfs TestPool raidz2 \
  /ramdisk/disk1.bin /ramdisk/disk2.bin /ramdisk/disk3.bin \
  /ramdisk/disk4.bin /ramdisk/disk5.bin /ramdisk/disk6.bin
```
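Since everything lives in a 20G tmpfs, it is worth checking that the numbers add up. This is a back-of-the-envelope sketch using the sizes from the commands above; it ignores ZFS metadata, padding, and slop space, so the RAID-Z2 figure is only a rough upper bound rather than what `zfs list` will report.

```shell
#!/usr/bin/env bash
# Rough space check for the RAM-backed test setup (sizes mirror the commands above).
disk_count=6
disk_bytes=$(( 3000 * 1000 * 1000 ))       # dd bs=1MB count=3000 (decimal megabytes)
data_bytes=$(( 2048 * 1024 * 1024 ))       # dd bs=1M count=2048 (binary mebibytes)
tmpfs_bytes=$(( 20 * 1024 * 1024 * 1024 )) # tmpfs size=20G
parity=2                                   # RAID-Z2 spends two disks' worth on parity
copies=5                                   # data1.bin .. data5.bin

ramdisk_used=$(( disk_count * disk_bytes + data_bytes ))
pool_data=$(( (disk_count - parity) * disk_bytes ))
test_files=$(( copies * data_bytes ))

echo "ramdisk used: $ramdisk_used of $tmpfs_bytes bytes"
echo "test files:   $test_files of ~$pool_data bytes of RAID-Z2 data capacity"
```

Both totals fit, but the five test copies use most of the pool, which is another reason the runs were capped at five files.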

To measure write speed, I simply copied the data file five times and took the result reported by dd. To get a single figure, I removed the highest and the lowest value and averaged the rest.

```shell
sudo -i << EOF
dd if=/ramdisk/data.bin of=/zfs/data1.bin bs=1M
dd if=/ramdisk/data.bin of=/zfs/data2.bin bs=1M
dd if=/ramdisk/data.bin of=/zfs/data3.bin bs=1M
dd if=/ramdisk/data.bin of=/zfs/data4.bin bs=1M
dd if=/ramdisk/data.bin of=/zfs/data5.bin bs=1M
EOF
```
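The averaging step (drop the highest and the lowest run, average the rest) is a simple trimmed mean. A minimal sketch, where the function name `trimmed_mean` is hypothetical and the sample figures are made up for illustration rather than taken from the results below:

```shell
#!/usr/bin/env bash
# Trimmed mean: sort the measurements, drop the single lowest and highest,
# and average the remaining ones. Needs at least three values.
trimmed_mean() {
  printf '%s\n' "$@" | LC_ALL=C sort -n | awk '
    { v[NR] = $1 }
    END {
      if (NR < 3) { print "need at least 3 values" > "/dev/stderr"; exit 1 }
      sum = 0
      for (i = 2; i < NR; i++) sum += v[i]
      printf "%.1f\n", sum / (NR - 2)
    }'
}

trimmed_mean 612 598 640 605 590   # hypothetical MB/s figures from five runs
```

With five runs this averages the middle three, so a single outlier in either direction cannot skew the figure.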

For reads, I took the files that were written and dumped them to /dev/null. The averaging procedure was the same as for writes.

```shell
sudo -i << EOF
dd if=/zfs/data1.bin of=/dev/null bs=1M
dd if=/zfs/data2.bin of=/dev/null bs=1M
dd if=/zfs/data3.bin of=/dev/null bs=1M
dd if=/zfs/data4.bin of=/dev/null bs=1M
dd if=/zfs/data5.bin of=/dev/null bs=1M
EOF
```
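GNU dd prints its summary on stderr and switches between MB/s and GB/s depending on speed, which makes collecting numbers by hand error-prone. A minimal sketch that recomputes MB/s from the byte count and elapsed seconds in that summary line; the function name `dd_mbps` is hypothetical, and it assumes the English/C-locale output format of GNU coreutils dd.

```shell
#!/usr/bin/env bash
# Parse GNU dd's summary line ("N bytes (...) copied, T s, X MB/s") and print
# throughput in MB/s computed from bytes and seconds, so all runs share one unit.
dd_mbps() {
  awk '/ copied, / { printf "%.1f\n", $1 / $(NF - 3) / 1000000 }'
}

# Usage against a real run (file from the steps above; needs root for /zfs):
#   LC_ALL=C dd if=/zfs/data1.bin of=/dev/null bs=1M 2>&1 | dd_mbps
echo '2000000000 bytes (2.0 GB, 1.9 GiB) copied, 4 s, 500 MB/s' | dd_mbps
```

Counting fields from the end keeps this working with both the newer summary format and the older one that lacks the GiB figure.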

With all that completed, I had my results.

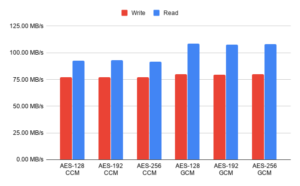

I was quite surprised how close the different key sizes were in performance. If your processor supports the AES instruction set (AES-NI), there is no reason not to go with 256 bits. Only when you have an older processor without encryption support does 128-bit crypto make sense. There was a 15% difference in read speeds in favor of GCM mode, so I would probably go with that as my cipher of choice.
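Whether the CPU has AES instructions is easy to check on Linux by looking for the `aes` flag in /proc/cpuinfo. A minimal sketch; the helper name `cpu_has_flag` is hypothetical, and treating `pclmulqdq` as relevant is my assumption that fast GCM implementations typically use that carry-less multiply instruction, not something measured in this test.

```shell
#!/usr/bin/env bash
# Succeed if the given CPU feature flag appears on a "flags" line of
# /proc/cpuinfo (or of an alternative file passed as the second argument).
cpu_has_flag() {
  grep -E '^flags[[:space:]]*:' "${2:-/proc/cpuinfo}" | grep -qw -- "$1"
}

# "aes" marks AES-NI; "pclmulqdq" is commonly used to speed up GCM.
for f in aes pclmulqdq; do
  if cpu_has_flag "$f"; then echo "$f: yes"; else echo "$f: no"; fi
done
```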

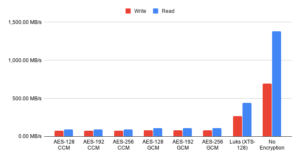

However, once I added measurements without encryption and for LUKS-based encryption, I was shocked. I expected things to go faster without encryption, but I didn't expect such a huge difference. Also surprising was seeing LUKS encryption deliver triple the performance of the native one.

Now, this test is not completely fair. In real life, with a more powerful machine and proper disks, you won't see such a huge difference. The sync=always setting is a performance killer and results in more encryption calls than you would normally see. However, you will still see some difference, and good old LUKS seems like the winner here. It's faster out of the box, it uses less CPU, and it encrypts all the data (ZFS leaves metadata in plaintext).

I will also admit that this comparison leans toward the apples-to-oranges kind. The reason to use ZFS native encryption is not its performance but the extra benefits it brings. Part of those extra cycles go into authenticating each written block with a strong MAC. Leaving metadata unencrypted does leak a bit of (meta)data, but it also enables send/receive without either side ever being decrypted: ideal for a backup box in an untrusted environment. You can back up the data without ever needing to enter a password on the remote side. Lastly, let's not forget that giving ZFS direct access to the physical drives lets it shine at detecting and handling faults. You will not get anything similar if you are going through a virtual device.
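The backup scenario relies on a raw send (`zfs send -w`), which streams blocks still encrypted so the receiving side never needs the key. This is a minimal sketch, not a setup used for this post; the function name `raw_backup` and all pool, dataset, snapshot, and host names are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Replicate an encrypted dataset to another host without loading its key on
# either side. Arguments (all hypothetical): source dataset, snapshot name,
# ssh host, destination dataset on the remote pool.
raw_backup() {
  local src=$1 snap=$2 host=$3 dest=$4
  # -w (raw) sends the encrypted blocks as-is; the remote side stores them
  # encrypted and cannot mount them without the passphrase.
  zfs snapshot "${src}@${snap}" &&
    zfs send -w "${src}@${snap}" | ssh "$host" zfs receive "$dest"
}

# Example (needs root or delegated ZFS permissions on both sides):
#   raw_backup TestPool backup1 backup-host BackupPool/TestPool
```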

Personally, I will continue using LUKS-based full-disk encryption for my desktop machines. It's just much faster. And I probably won't touch my servers for now either. But I have a feeling that I might give native ZFS encryption a spin really soon.

[2020-11-01: Newer updates of 0.8.3 (0.8.3-1ubuntu12.4) have greatly improved GCM speed. With those optimizations, GCM mode is now faster than LUKS. For more details, check the 20.10 post.]

PS: You can take a peek at the raw data if you're so inclined.

ZFS crypto is particularly bad for VMs. Because of details of how it is implemented, it causes extra context switches out to the hypervisor.

Plans are afoot to fix this, but you should consider comparing with real hardware.

I’m curious if ZoL can use AES-NI. On illumos it cannot for reasons relating to floating point limitations in the kernel. (We have a proprietary FIPS 140-2 crypto provider at RackTop that does not have these limitations.)

True on VM behavior. I do plan to check it on real hardware in the next month or so, but only on an i3, as I don't expect to have any server hardware available to play with.

Considering how many blocks have to be updated and that ZFS is just 2x slower than LUKS, I would assume it uses AES-NI. Otherwise I would expect much worse results. Frankly, 50% of LUKS performance is nothing to frown about, and it will only get better with time.

What version of ZFS did you use? There were significant performance improvements for GCM encryption in 0.8.4.

This test was done on 0.8.3. I will check 0.8.4 a bit later too. Thanks for the info!

Here are my results for 10 GB sequential writes:

0.8.3 (apt install zfsutils-linux): 240 MB/s

0.8.4 (compiled from Github): 740 MB/s

Pretty substantial difference.

Currently, in 2020, if I'd read just this article and not the comments, I'd go with LUKS over native encryption, and I'd be wrong.

With https://github.com/openzfs/zfs/pull/9749 merged, you can expect even more from ZFS 2.0 than from 0.8.4, which is already way faster than the 0.8.3 you tested (about 12x the throughput for large 128 KiB blocks, according to the PR).

I think it would be useful for readers if you updated the article with tests run against a recent version of ZFS, or at least added a heading that clarifies this point.

Numbers are what they are. Yes, there are improvements with ZFS 0.8.4, but most mainstream Linux distributions (e.g. Ubuntu) are still using ZFS 0.8.3.

I did see quite an uptick when I manually compiled 0.8.4, but it was too cumbersome for me to keep it. Maybe I'm just a pussy, but I am not going to install any ZFS version on my daily driver if it's not in the repo.

On the other hand, I have it on my to-do list to repeat the same tests with Ubuntu 20.10. That one should come with 0.8.4 out of the box. I'll add an update to the top of the article then.

If it's worth anything, I am actually using ZFS native encryption myself on both my desktop and my NAS server. On the server with spinning drives I don't even see the difference. On the desktop it's most noticeable when transferring large files, and there it definitely impacts speed, a lot. However, outside of large copy operations I don't really see many negatives.

My shitty 5400 rpm RAID-Z2 does 350 MB/s read/write with ZFS encryption. I thought it was a lot lower with 0.8.3.

Hi,

Thank you for this blog post, very useful for me and for others.

I am really looking forward to your 20.10 update. Any idea when that might come out?

I'm currently researching how I might benchmark encryption performance myself, but I think it's a bit over my head. I currently have 20.10 with ZFS native encryption installed on my laptop, but I'm not sure how to test it.

It would also be interesting to compare against ZFS+LUKS or Btrfs+LUKS.

The update will come next month. I plan to do the testing this weekend if all the stars align. :)

Great work and writeup, many thanks.

Any update regarding Ubuntu 20.10 and ZFS 0.8.4+? It appears 20.10 was released on Oct 22.

I got the new performance measurements done over the weekend. And it’s good! :)