Ubuntu 20.04 Desktop offers a (still experimental) ZFS setup option in addition to the long-standing manual ZFS installation. For Ubuntu Server we still depend on the manual steps.

The steps here follow my 19.10 server guide but without the encryption steps. While I normally love having encryption enabled, there are situations where it gets in the way. The most notable example is a machine you cannot access remotely to enter the encryption key.

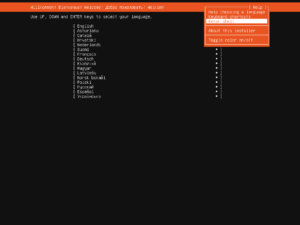

To start the installation we need to get to the root prompt. Just find Enter Shell behind the Help menu item (Shift+Tab comes in handy) and you're there.

The very first step is setting up a few variables: disk, pool, host name, and user name. This way we can use them going forward and avoid mistakes. Make sure to replace these values with the ones appropriate for your system. It's a good idea to use something unique for the pool name (e.g. the host name). I personally also like having the pool name start with an uppercase letter but there is no real rule here.

DISK=/dev/disk/by-id/ata_disk

POOL=Ubuntu

HOST=server

USER=user
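
Since a typo in any of these values only shows up several destructive commands later, it may be worth checking them before going on. Here is a minimal sketch, assuming a POSIX shell; `require_vars` is a hypothetical helper of mine and not part of any package.

```shell
# Hypothetical helper: report every listed variable that is empty or unset.
require_vars() {
    missing=0
    for name in "$@"; do
        # Indirect lookup: copy the value of the variable named in $name
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "Variable $name is not set" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example values from this guide; replace them with your own
DISK=/dev/disk/by-id/ata_disk
POOL=Ubuntu
HOST=server
USER=user

require_vars DISK POOL HOST USER && echo "All variables are set"
```

On the real machine, `[ -b "$DISK" ]` is a further check that the disk path actually points at a block device.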

To start the fun we need the debootstrap and zfsutils-linux packages. Unlike with the desktop installation, the ZFS package is not installed by default.

apt install --yes debootstrap zfsutils-linux

The general idea of my disk setup is to maximize the space available for the pool with a minimum of supporting partitions. If you are planning to have multiple kernels, increasing the boot partition size might be a good idea. The major change compared to my previous guide is partition numbering. While having a partition layout different from the partition order had its advantages, a lot of partition editing tools would simply "correct" the partition order to match the layout and thus cause issues down the road.

blkdiscard $DISK

sgdisk --zap-all $DISK

sgdisk -n1:1M:+127M -t1:EF00 -c1:EFI $DISK

sgdisk -n2:0:+512M -t2:8300 -c2:Boot $DISK

sgdisk -n3:0:0 -t3:8309 -c3:Ubuntu $DISK

sgdisk --print $DISK
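
If you do change the boot partition size, it helps to know how much of the disk the supporting partitions take away from the pool. The arithmetic can be sketched as follows; `pool_start_mib` is a hypothetical helper, and the result is approximate since it ignores partition alignment details and the backup GPT at the end of the disk.

```shell
# Hypothetical helper: offset (in MiB) at which the pool partition would start.
# $1 = EFI partition size in MiB, $2 = boot partition size in MiB.
# Assumes the first partition starts at 1 MiB, as in the sgdisk call above.
pool_start_mib() {
    echo $(( 1 + $1 + $2 ))
}

pool_start_mib 127 512    # layout used in this guide
pool_start_mib 127 1024   # same layout with a 1 GiB boot partition
```

With the sizes from this guide the pool starts at roughly 640 MiB, so everything past that point is available to ZFS.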

Now we're ready to create the system ZFS pool.

zpool create -o ashift=12 -O compression=lz4 -O normalization=formD \
    -O acltype=posixacl -O xattr=sa -O dnodesize=auto -O atime=off \
    -O canmount=off -O mountpoint=none -R /mnt/install $POOL $DISK-part3

zfs create -o canmount=noauto -o mountpoint=/ $POOL/root

zfs mount $POOL/root
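
Note that the `$DISK-part3` form only works when DISK is a /dev/disk/by-id style path. If you pointed DISK at a kernel device name instead, the partition suffix looks different. A small sketch of the naming rules, with `part_path` being a hypothetical helper used only for illustration:

```shell
# Hypothetical helper: build the path of partition $2 on disk $1.
part_path() {
    case "$1" in
        /dev/disk/by-*) echo "$1-part$2" ;;  # persistent names use a -partN suffix
        *[0-9])         echo "$1p$2" ;;      # names ending in a digit, e.g. /dev/nvme0n1
        *)              echo "$1$2" ;;       # classic names, e.g. /dev/sda
    esac
}

part_path /dev/disk/by-id/ata_disk 3
part_path /dev/nvme0n1 3
part_path /dev/sda 3
```

Sticking with by-id paths, as this guide does, avoids the problem altogether and keeps the pool independent of device enumeration order.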

Assuming UEFI boot, two additional partitions are needed: one for EFI and one for booting. Unlike what you get with the official guide, here I don't use a ZFS pool for the boot partition but plain old ext4. I find that potential fixups work better that way and boot compatibility is better. If you are thinking about mirroring, making it bigger and using ZFS might be a good idea. For a single disk, ext4 will do.

yes | mkfs.ext4 $DISK-part2

mkdir /mnt/install/boot

mount $DISK-part2 /mnt/install/boot/

mkfs.msdos -F 32 -n EFI $DISK-part1

mkdir /mnt/install/boot/efi

mount $DISK-part1 /mnt/install/boot/efi

Bootstrapping Ubuntu on the newly created pool is next. As we're dealing with a server, you can consider using --variant=minbase rather than the default variant. I personally don't see much value in that as other packages get installed as dependencies anyhow. In any case, this will take a while.

debootstrap focal /mnt/install/

zfs set devices=off $POOL

Our newly copied system is lacking a few files and we should make sure they exist before proceeding.

echo $HOST > /mnt/install/etc/hostname

sed '/cdrom/d' /etc/apt/sources.list > /mnt/install/etc/apt/sources.list

cp /etc/netplan/*.yaml /mnt/install/etc/netplan/

Finally we're ready to "chroot" into our new system.

mount --rbind /dev /mnt/install/dev

mount --rbind /proc /mnt/install/proc

mount --rbind /sys /mnt/install/sys

chroot /mnt/install /usr/bin/env DISK=$DISK POOL=$POOL USER=$USER bash --login
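
The reason for spelling out the variables on the `env` command line is that plain assignments are not exported, so the shell inside the chroot would otherwise not see them. A tiny demonstration that runs without any chroot, using only the example values from this guide:

```shell
# Plain assignments like these are shell variables, not exported ones
unset HOST
DISK=/dev/disk/by-id/ata_disk
HOST=server

# Only names listed on the env command line reach the child process
seen=$(env DISK="$DISK" sh -c 'echo "DISK=${DISK:-unset} HOST=${HOST:-unset}"')
echo "$seen"
```

HOST is not passed into the chroot above, which is fine as none of the later steps use it.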

Let's not forget to set up the locale and time zone. If you opted for minbase you can either skip this step or manually install the locales and tzdata packages.

locale-gen --purge "en_US.UTF-8"

update-locale LANG=en_US.UTF-8 LANGUAGE=en_US

dpkg-reconfigure --frontend noninteractive locales

dpkg-reconfigure tzdata

Now we're ready to onboard the latest Linux image.

apt update

apt install --yes --no-install-recommends linux-image-generic linux-headers-generic

Followed by boot environment packages.

apt install --yes zfs-initramfs grub-efi-amd64-signed shim-signed

To mount EFI and boot partitions, we need to do some fstab setup too:

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK-part2) \
/boot ext4 noatime,nofail,x-systemd.device-timeout=1 0 1" >> /etc/fstab

echo "PARTUUID=$(blkid -s PARTUUID -o value $DISK-part1) \
/boot/efi vfat noatime,nofail,x-systemd.device-timeout=1 0 1" >> /etc/fstab

cat /etc/fstab
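
Each fstab entry must end up on a single line with six fields: device, mount point, filesystem type, options, dump flag, and fsck pass. To make that structure explicit, the two entries could be produced by a small function like the one below. It is only a sketch; `fstab_line` is a hypothetical helper and the PARTUUID in the example is made up.

```shell
# Hypothetical helper: print one fstab entry using the options from this guide.
# $1 = PARTUUID, $2 = mount point, $3 = filesystem type
fstab_line() {
    printf 'PARTUUID=%s %s %s noatime,nofail,x-systemd.device-timeout=1 0 1\n' \
        "$1" "$2" "$3"
}

# Made-up PARTUUID; on the real system it would come from blkid
fstab_line 01234567-89ab-cdef-0123-456789abcdef /boot ext4
```

On the real system the output would be appended with `>> /etc/fstab`, with `$(blkid -s PARTUUID -o value $DISK-part2)` supplying the first argument.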

Now we get grub started and update our boot environment. Due to Ubuntu 19.10 having some kernel version kerfuffle, we need to manually create the initramfs image. As before, cryptsetup discovery errors during mkinitramfs and update-initramfs are OK.

KERNEL=$(ls /usr/lib/modules/ | cut -d/ -f1 | sed 's/linux-image-//')

update-initramfs -u -k $KERNEL
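
The `ls` above relies on there being exactly one directory under /usr/lib/modules, which holds for a fresh install. Should more than one kernel be present, a version sort can pick the newest one. A minimal sketch, assuming GNU `sort` with its `-V` option; `latest_kernel` is a hypothetical helper and the version names are just examples.

```shell
# Hypothetical helper: print the highest version directory under $1.
latest_kernel() {
    ls "$1" | sort -V | tail -n 1
}

# Demonstration on a scratch directory instead of /usr/lib/modules
demo=$(mktemp -d)
mkdir "$demo/5.4.0-9-generic" "$demo/5.4.0-26-generic"
latest_kernel "$demo"
rm -r "$demo"
```

Inside the chroot this would become `KERNEL=$(latest_kernel /usr/lib/modules)`.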

Grub update is what makes EFI tick.

update-grub

grub-install --target=x86_64-efi --efi-directory=/boot/efi --bootloader-id=Ubuntu \
    --recheck --no-floppy

Since we're dealing with a computer that will most probably be used without a screen, it makes sense to install OpenSSH Server.

apt install --yes openssh-server

I also prefer to allow remote root login. Yes, you can create a sudo user and have root unreachable but that's just swapping one security issue for another. A root user secured with a key is plenty safe.

sed -i '/^#PermitRootLogin/s/^.//' /etc/ssh/sshd_config

mkdir /root/.ssh

echo "<mykey>" >> /root/.ssh/authorized_keys

chmod 644 /root/.ssh/authorized_keys

If you're willing to deal with passwords, you can allow them too by changing both the PasswordAuthentication and PermitRootLogin parameters. I personally don't do this.

sed -i '/^#PasswordAuthentication yes/s/^.//' /etc/ssh/sshd_config

sed -i '/^#PermitRootLogin/s/^.//' /etc/ssh/sshd_config

sed -i 's/^PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config

passwd
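
If you'd like to see what these sed edits do before touching the real configuration, you can run them against a scratch file first. The sketch below assumes the stock sshd_config carries the two commented lines shown and that GNU sed (with `-i`) is available.

```shell
# Work on a scratch file that mimics the relevant default lines
conf=$(mktemp)
printf '%s\n' '#PermitRootLogin prohibit-password' '#PasswordAuthentication yes' > "$conf"

# The same three edits as above, pointed at the scratch file
sed -i '/^#PasswordAuthentication yes/s/^.//' "$conf"
sed -i '/^#PermitRootLogin/s/^.//' "$conf"
sed -i 's/^PermitRootLogin prohibit-password/PermitRootLogin yes/' "$conf"

result=$(cat "$conf")
echo "$result"
rm "$conf"
```

The first two commands only strip the leading `#`, while the third one is what actually switches root login from key-only to password-enabled.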

A short package upgrade will not hurt.

apt dist-upgrade --yes

We can omit creation of the swap dataset but I personally find it's good to have it just in case.

zfs create -V 4G -b $(getconf PAGESIZE) -o compression=off -o logbias=throughput \
    -o sync=always -o primarycache=metadata -o secondarycache=none $POOL/swap

mkswap -f /dev/zvol/$POOL/swap

echo "/dev/zvol/$POOL/swap none swap defaults 0 0" >> /etc/fstab

echo RESUME=none > /etc/initramfs-tools/conf.d/resume

If one is so inclined, the /home directory can get a separate dataset too.

rmdir /home

zfs create -o mountpoint=/home $POOL/home

And now we create the user and assign a few extra groups to it.

adduser --disabled-password --gecos '' $USER

usermod -a -G adm,cdrom,dip,plugdev,sudo $USER

chown -R $USER:$USER /home/$USER

passwd $USER

Consider enabling a firewall. While you can go wild with firewall rules, I like to keep them simple to start with. All outgoing traffic is allowed while incoming traffic is limited to new SSH connections and responses to the already established ones.

apt install --yes man iptables iptables-persistent

iptables -F

iptables -X

iptables -Z

iptables -P INPUT DROP

iptables -P FORWARD DROP

iptables -P OUTPUT ACCEPT

iptables -A INPUT -i lo -j ACCEPT

iptables -A INPUT -m conntrack --ctstate ESTABLISHED,RELATED -j ACCEPT

iptables -A INPUT -p tcp --dport 22 -j ACCEPT

iptables -A INPUT -p icmp -j ACCEPT

iptables -A INPUT -p ipv6-icmp -j ACCEPT

netfilter-persistent save
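
Should the server later need more than SSH, the pattern of the port 22 rule simply repeats for each port. One way to sketch that is a function that prints the rules for review instead of applying them. `allow_tcp_rules` is a hypothetical helper, and ports 80 and 443 are examples only, not something this setup requires.

```shell
# Hypothetical helper: print one ACCEPT rule per TCP port given as argument.
# It only prints the commands; pipe the output to sh (as root) to apply them.
allow_tcp_rules() {
    for port in "$@"; do
        echo "iptables -A INPUT -p tcp --dport $port -j ACCEPT"
    done
}

allow_tcp_rules 22 80 443
```

Remember to run `netfilter-persistent save` again after applying any new rule so that it survives a reboot.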

As the install is ready, we can exit our chroot environment.

exit

And clean up our mount points.

umount /mnt/install/boot/efi

umount /mnt/install/boot

mount | grep -v zfs | tac | awk '/\/mnt/ {print $3}' | xargs -i{} umount -lf {}

zpool export -a
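
The long pipeline above skips ZFS mounts (the pool export takes care of those), reverses the list so nested mounts come before their parents, and keeps only mount points under /mnt. To see which targets it would select without unmounting anything, the filter can be run on sample input. The sketch below uses made-up `mount` output; `umount_targets` is a hypothetical name for the filter part.

```shell
# Same filter as above, reading "mount"-style lines from standard input
umount_targets() {
    grep -v zfs | tac | awk '/\/mnt/ {print $3}'
}

# Sample input imitating the output of "mount" (made up for illustration)
sample='sysfs on /sys type sysfs (rw)
Ubuntu/root on /mnt/install type zfs (rw)
udev on /mnt/install/dev type devtmpfs (rw)
devpts on /mnt/install/dev/pts type devpts (rw)
proc on /mnt/install/proc type proc (rw)'

printf '%s\n' "$sample" | umount_targets
```

On the live system, `mount | umount_targets` gives a preview of what the xargs part is about to unmount.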

After the reboot you should be able to enjoy your installation.

reboot

Hi,

Very nice guide, THX!

I’ve got one question.

In most (?) of your ZFS tutorials, you call the main pool “Ubuntu”. Is there a reason for such a generic name?

Do some tools use it explicitly?

From my experience with LVS I prefer to have “$HOST” in the pool name. It is very useful if you have to mount the drive in some other machine, as this way you avoid name collisions.

This is just a generic name – it can be literally anything (ok, within reason :)). I quite often use host name too albeit not necessarily to deal with name collisions but to avoid accidental pool operations on the wrong machine.

I added clarification in text that all four initial elements are configurable.

I assumed it was as you wrote, but I wasn’t sure about grub or something like that.

I’ve been using this setup for a few weeks now and everything works great, except that during the install I accidentally put something in `/home/` before mounting the proper dataset, and I didn't realize that until a few days later. It ended with some temporary mounting, killing X, copying files to temp_mount, and starting X again.

Since then, this setup has worked like a charm.

Once more, thanks for the guide. It's a nice way to learn not only about ZFS but also how OS installation works.