I am a creature of habit. A long time ago I found a ZFS setup that works for me and I haven't changed much since. But sometimes I wonder if those settings still hold with SSDs in the game. Most notably, are 4K blocks still the best?

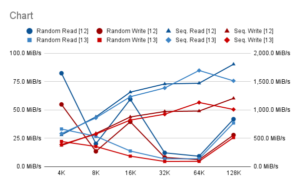

Since I already "had" to update my desktop to Ubuntu 21.10, I used that opportunity to clear my disks and install it from scratch. And it would be a shame not to run some tests first on my XPG SX6000 Pro, the SSD I use purely for data storage. After trimming this DRAM-less SSD, I tested the pool across multiple recordsize values and at ashift values of 12 (4K block) and 13 (8K block).
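To make the relationship between ashift and block size concrete, here is a minimal Python sketch. ZFS uses 2^ashift bytes as the smallest allocation unit, which is where the 4K and 8K figures come from. The recordsize list below is only an assumed example and not necessarily the exact set I benchmarked.

```python
from itertools import product


def sector_size(ashift: int) -> int:
    """Return the allocation unit in bytes for a given ashift (2**ashift)."""
    return 1 << ashift


# ashift values come from the tests described above.
ASHIFTS = [12, 13]
# Assumed example values; the post does not list the exact recordsizes tested.
RECORDSIZES_KIB = [4, 8, 16, 32, 64, 128]


def test_matrix():
    """Enumerate every (ashift, sector bytes, recordsize KiB) combination."""
    return [
        (ashift, sector_size(ashift), rs)
        for ashift, rs in product(ASHIFTS, RECORDSIZES_KIB)
    ]


if __name__ == "__main__":
    for ashift, sector, rs in test_matrix():
        print(f"ashift={ashift} ({sector} B sectors), recordsize={rs}K")
```

Each combination means recreating the pool, since ashift cannot be changed on an existing vdev.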

My goal was to find good default settings for both bulk storage and virtual machines. Unfortunately, those are quite opposite requirements. Bulk storage benefits greatly from good sequential access while virtual machines care more about random IO. Fortunately, with ZFS, one can accomplish both using two datasets with different recordsize values. But the ashift value has to be the same, since it is fixed when the pool is created and not per dataset.
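As a rough illustration of that layout, the following Python sketch only builds and prints the commands (a dry run), it does not execute anything. The pool name `tank`, the device path, and the dataset names `data` and `vm` are hypothetical placeholders. The recordsize and compression values are the ones I settled on later in this post.

```python
import shlex


def layout_commands(pool="tank", device="/dev/disk/by-id/EXAMPLE", ashift=12):
    """Build (but do not run) commands for one pool with two datasets.

    The pool, device, and dataset names are hypothetical placeholders.
    """
    return [
        # ashift is set once, at pool creation, and applies to both datasets.
        ["zpool", "create", "-o", f"ashift={ashift}", pool, device],
        # Bulk storage: large records favor sequential access.
        ["zfs", "create", "-o", "recordsize=128K", f"{pool}/data"],
        # Virtual machines: small records, compression off.
        ["zfs", "create", "-o", "recordsize=4K", "-o", "compression=off",
         f"{pool}/vm"],
    ]


if __name__ == "__main__":
    for cmd in layout_commands():
        print(shlex.join(cmd))
```

Printing instead of executing keeps the sketch safe to run on a machine with real data. Review the output before pasting anything into a root shell.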

Due to erase block sizes getting larger and larger, I expected performance to be better with 8K "sectors" (ashift=13) than with what I usually use (ashift=12). But I was surprised.

First of all, the results were all over the place, but it seems that ashift=12 is still a valid starting point. It might be due to my SSD having a smaller erase block than expected, but I doubt it. I lean more toward SSDs being optimized for 4K loads. And the specific SSD I tested with is DRAM-less, which should make any such optimizations even more visible.

Optimizations are probably also the reason for 128K performing so well in the random IO scenarios. Yes, for sequential access you would expect it, but for random access it makes no sense how fast it is. Whatever the cause, it makes recordsize=128K still the best general choice. Regardless, for VMs, I created a sub-dataset with much smaller 4K records (and compression off) just to lower write amplification a bit.
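The write amplification argument can be put into numbers with a small sketch. It assumes the simplest worst case: a write that covers only part of a record forces ZFS to rewrite the whole record, and the write is aligned to record boundaries. It ignores compression, caching, metadata, write aggregation, and whatever the SSD does internally, so treat the result as an upper-bound estimate and not a measurement.

```python
def worst_case_amplification(write_bytes: int, recordsize_bytes: int) -> float:
    """Bytes rewritten per byte changed, assuming whole records are rewritten.

    Simplified model: the write is record-aligned and every touched record
    is rewritten in full.
    """
    records_touched = -(-write_bytes // recordsize_bytes)  # ceiling division
    return records_touched * recordsize_bytes / write_bytes


if __name__ == "__main__":
    KIB = 1024
    for recordsize_kib in (4, 128):
        factor = worst_case_amplification(4 * KIB, recordsize_kib * KIB)
        print(f"4K write into recordsize={recordsize_kib}K: {factor:.0f}x")
```

Under this model a 4K guest write into a 128K record rewrites 32 times as much data as was changed, while a 4K record keeps it at 1x. That is the reasoning behind the separate VM dataset.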

The full test results are in Google Sheets. For testing I used fio's fio-rand-RW.fio and fio-seq-RW.fio profiles.